Natural Language Processing (NLP) is an artificial intelligence technique used by computer systems to find the meaning of text content. It allows human users to ask questions in ordinary sentences written in a natural way. A successful NLP system lets users phrase the same question in different ways and still understands what is being asked.

In this article we use Natural Language Processing (NLP) to create a sample Java application that can find weather data using ‘natural language’ text questions. This allows users to find weather data using queries such as:

- Tell me the weather in Tokyo, Japan on the 6th January 1975.

- What will the weather be in London, England next Wednesday?

- What was the weather in Washington,DC last 4th July?

For the full source of this example, download it from our GitHub repository: https://github.com/visualcrossing/WeatherApi/tree/master/Java/com/visualcrossing/weather/samples

Steps to create a simple NLP weather answer bot

In our sample, we will restrict our NLP processing to identifying whether the user is asking a question about weather data. Supported questions are those that ask for the upcoming weather forecast or look up historical weather observations.

To do this, we need to identify two pieces of information:

- The location for which the user is attempting to find weather information.

- The date or date range for the weather data.

Once we have found this information, we can then look up the weather data. To do this, we will use the Visual Crossing Weather API. This Timeline Weather API makes it easy to look up both past and future weather data as it automatically adjusts to the date range we ask for. If we ask for dates in the future, it will give us the weather forecast. If we ask for data in the past, then it will give us historical weather observations.

To run the sample code, you will need a free Weather API key. If you don’t have an API key, head over to the free sign up page to create your key.

Step one – setting up the NLP Processing

There are a number of excellent open source Java libraries that can be used for NLP processing. These include Apache OpenNLP and CoreNLP, created by the Stanford NLP Group. In this sample, we will use CoreNLP as it provides a simple way to get started and includes pre-trained models that cover many of the questions we need to answer.

Identifying the location and dates

We will use NLP processing to find two pieces of information in the user’s question so we can find appropriate weather data: the location and the dates. We will therefore focus on using named entity recognition to find entity mentions within the text. Entity mentions represent items of particular interest within the text such as a person, location, date or time.

We are looking for entity mentions that identify the target location of the weather data (such as city, state or province or country) and the dates for which we should find the weather data.

Therefore we need the NLP processor to be able to extract the location and the dates from the text. Let’s consider a few examples.

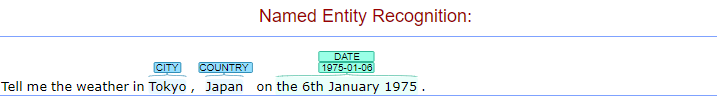

Tell me the weather in Tokyo, Japan on the 6th January 1975.

In this case, the processor should identify the location is Tokyo, Japan and that the user has provided an exact date – 6th January 1975. CoreNLP includes a demo site that provides a graphical interpretation of the text:

We can see that the library correctly identifies the location as the combination of a city and country and then identifies and parses the intended date correctly.

We will be able to pass the location of ‘Tokyo, Japan’ and the date to the Weather API to look up the weather information.

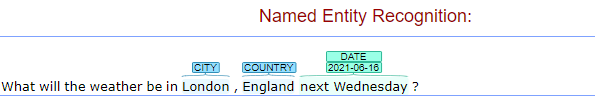

What will the weather be in London, England next Wednesday?

The location in this case is London, England. The date is a little trickier as it’s not a simple date (although there are many ways a user might write a date, so even that is not a trivial exercise!). We would like the NLP to be able to interpret ‘next Wednesday’ as asking for a date and then convert it to an exact date.

If we run the above text through the online demo, we can see that again the library correctly finds the location and intended date:

We can see that the NLP processing has correctly understood the concept of ‘next Wednesday’. From there, the library finds the next Wednesday after the current date.
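The date arithmetic behind a phrase like ‘next Wednesday’ can be sketched with the standard java.time API. This is only an illustration of the idea and not how CoreNLP resolves dates internally. The class and method names are made up for this example, and it assumes ‘next Wednesday’ means the first Wednesday strictly after the reference date.

```java
import java.time.DayOfWeek;
import java.time.LocalDate;
import java.time.temporal.TemporalAdjusters;

// Hypothetical helper for illustration; not part of the sample or of CoreNLP.
class NextWeekday {

    // Returns the first occurrence of the given weekday strictly after the reference date.
    static LocalDate nextAfter(LocalDate reference, DayOfWeek day) {
        return reference.with(TemporalAdjusters.next(day));
    }

    public static void main(String[] args) {
        // Resolve 'next Wednesday' relative to today.
        System.out.println(nextAfter(LocalDate.now(), DayOfWeek.WEDNESDAY));
    }
}
```

If the reference date is itself a Wednesday, this sketch returns the Wednesday one week later.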

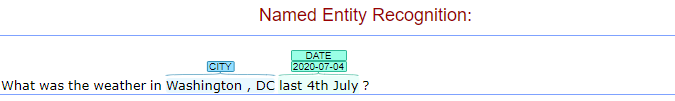

What was the weather in Washington,DC last 4th July?

If we look at our final example, again we see that the NLP library correctly processes the location and target date:

Again the library has been able to identify the city of Washington,DC and has interpreted ‘last’ as referring to the most recent occurrence of the 4th of July.
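For comparison, the idea of ‘the last occurrence of a given day’ can be written by hand with java.time. This is a minimal sketch under the assumption that ‘last’ means the most recent occurrence strictly before the reference date; the names are hypothetical and CoreNLP does this work for us in the actual sample.

```java
import java.time.LocalDate;
import java.time.MonthDay;

// Hypothetical helper for illustration; not part of the sample or of CoreNLP.
class LastOccurrence {

    // Returns the most recent occurrence of the month/day strictly before the reference date.
    static LocalDate lastBefore(LocalDate reference, MonthDay monthDay) {
        LocalDate candidate = monthDay.atYear(reference.getYear());
        return candidate.isBefore(reference)
                ? candidate
                : monthDay.atYear(reference.getYear() - 1);
    }

    public static void main(String[] args) {
        // Resolve 'last 4th July' relative to today.
        System.out.println(lastBefore(LocalDate.now(), MonthDay.of(7, 4)));
    }
}
```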

Step two – creating the NLP Java code

We are now ready to create the first part of our Java sample. Our sample will be a Java application that prompts the user for text, processes the text to find the requested location and dates, and then looks up the weather data. To keep things simple, all output is written to System.out.

Before jumping into the code, first download the required libraries from CoreNLP. These samples were written using version 4.2.2 but any recent version should work.

If you configure your Java project using Maven, you can find the required Maven dependencies here. The Maven dependencies we added were:

    <dependency>
        <groupId>edu.stanford.nlp</groupId>
        <artifactId>stanford-corenlp</artifactId>
        <version>4.2.2</version>
    </dependency>
    <dependency>
        <groupId>edu.stanford.nlp</groupId>
        <artifactId>stanford-corenlp</artifactId>
        <version>4.2.2</version>
        <classifier>models</classifier>
    </dependency>

Setting up the NLP Pipeline

The first part of our code is the main Java method. In this method we first set up the CoreNLP pipeline and create a basic loop for the user to enter text. For more information on the set up and configuration of CoreNLP, see the CoreNLP API samples.

    import java.util.Properties;
    import edu.stanford.nlp.pipeline.StanfordCoreNLP;

    public static void main(String[] args) {
        // set up pipeline properties
        Properties props = new Properties();
        // set the list of annotators to run
        props.setProperty("annotators", "tokenize,ssplit,pos,lemma,ner");
        // carried over from the CoreNLP sample: this selects the neural coref algorithm,
        // which only matters if a coref annotator is added to the list above
        props.setProperty("coref.algorithm", "neural");
        // use the present date as the document date so relative dates can be resolved
        props.setProperty("ner.docdate.usePresent", "true");
        // ask SUTime to report range information for the dates it finds
        props.setProperty("sutime.includeRange", "true");
        props.setProperty("sutime.markTimeRanges", "true");
        // build pipeline
        System.out.printf("Starting pipeline...%n");
        StanfordCoreNLP pipeline = new StanfordCoreNLP(props);
        System.out.printf("Pipeline ready...%n%n");
    }

In the ‘annotators’ property, we have reduced the number of annotators for our sample because we are mainly focused on the output of the ‘ner’ annotator. The ‘ner’ annotator is implemented by the NERCombinerAnnotator class, which is responsible for the named entity processing we need to find the locations and dates. The remaining annotators are required for ner to function.

We now add a loop to the above main method to allow the user to enter text and have the sample process the data.

    import java.util.Properties;
    import java.util.Scanner;
    import edu.stanford.nlp.pipeline.StanfordCoreNLP;

    public static void main(String[] args) {
        // set up pipeline properties
        Properties props = new Properties();
        // set the list of annotators to run
        props.setProperty("annotators", "tokenize,ssplit,pos,lemma,ner");
        // carried over from the CoreNLP sample: this selects the neural coref algorithm,
        // which only matters if a coref annotator is added to the list above
        props.setProperty("coref.algorithm", "neural");
        // use the present date as the document date so relative dates can be resolved
        props.setProperty("ner.docdate.usePresent", "true");
        // ask SUTime to report range information for the dates it finds
        props.setProperty("sutime.includeRange", "true");
        props.setProperty("sutime.markTimeRanges", "true");
        // build pipeline
        System.out.printf("Starting pipeline...%n");
        StanfordCoreNLP pipeline = new StanfordCoreNLP(props);
        System.out.printf("Pipeline ready...%n%n");
        // loop forever asking the user for text to process
        try (Scanner in = new Scanner(System.in)) {
            while (true) {
                System.out.printf("Enter text:%n%n");
                String text = in.nextLine();
                try {
                    processText(pipeline, text);
                } catch (Exception e) {
                    // report the problem and keep prompting for the next question
                    e.printStackTrace();
                }
            }
        }
    }

After the user enters text, the sample passes the configured pipeline and the text to a second method, ‘processText’, which processes the text.
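The loop above runs until the program is interrupted. One possible variation, sketched below with only the standard library, stops when the input ends or when the user types an exit keyword. The ‘quit’ keyword, the class name and the handler parameter are assumptions made for this sketch and do not appear in the sample; in the real application the handler would call processText.

```java
import java.io.InputStream;
import java.util.Scanner;
import java.util.function.Consumer;

// Hypothetical variation on the input loop; not part of the sample.
class QueryLoop {

    // Reads one query per line and passes it to the handler until the input ends
    // or the user types "quit". Returns the number of queries handled successfully.
    static int run(InputStream input, Consumer<String> handler) {
        int handled = 0;
        try (Scanner in = new Scanner(input)) {
            while (in.hasNextLine()) {
                String text = in.nextLine().trim();
                if (text.equalsIgnoreCase("quit")) {
                    break;
                }
                if (text.isEmpty()) {
                    continue; // ignore blank lines
                }
                try {
                    handler.accept(text);
                    handled++;
                } catch (RuntimeException e) {
                    // report the problem and keep reading
                    System.err.println("Could not process: " + e.getMessage());
                }
            }
        }
        return handled;
    }

    public static void main(String[] args) {
        run(System.in, text -> System.out.println("Would process: " + text));
    }
}
```

Taking the input stream as a parameter also makes the loop easy to exercise without typing at a console.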

Processing the text for location and date

    import java.time.LocalDate;
    import java.time.LocalDateTime;
    import java.time.ZoneId;
    import java.util.Calendar;
    import edu.stanford.nlp.pipeline.CoreDocument;
    import edu.stanford.nlp.pipeline.CoreEntityMention;
    import edu.stanford.nlp.pipeline.StanfordCoreNLP;
    import edu.stanford.nlp.time.TimeAnnotations;
    import edu.stanford.nlp.time.Timex;

    private static void processText(StanfordCoreNLP pipeline, String text) throws Exception {
        CoreDocument document = new CoreDocument(text);
        // annotate the document
        pipeline.annotate(document);
        if (document.entityMentions() == null || document.entityMentions().isEmpty()) {
            System.out.println("no entities found");
            return;
        }
        String city = null;
        String state = null;
        String country = null;
        LocalDate startDate = null;
        LocalDate endDate = null;
        // DATE, LOCATION_CITY, LOCATION_STATE_OR_PROVINCE and LOCATION_COUNTRY are assumed
        // to be String constants, defined elsewhere in the class, that hold the entity type names
        for (CoreEntityMention em : document.entityMentions()) {
            if (DATE.equals(em.entityType())) {
                Timex timex = em.coreMap().get(TimeAnnotations.TimexAnnotation.class);
                if (timex == null) continue; // no parsed time value attached to this mention
                Calendar t1 = timex.getRange().first;
                if (t1 != null) {
                    startDate = LocalDateTime.ofInstant(t1.toInstant(),
                            ZoneId.systemDefault()).toLocalDate();
                }
                Calendar t2 = timex.getRange().second;
                if (t2 != null) {
                    endDate = LocalDateTime.ofInstant(t2.toInstant(),
                            ZoneId.systemDefault()).toLocalDate();
                }
            } else if (LOCATION_CITY.equals(em.entityType())) {
                city = em.text();
            } else if (LOCATION_STATE_OR_PROVINCE.equals(em.entityType())) {
                state = em.text();
            } else if (LOCATION_COUNTRY.equals(em.entityType())) {
                country = em.text();
            }
        }
        // combine the location parts into a single comma separated string
        String location = "";
        if (city != null) location += location.isEmpty() ? city : ("," + city);
        if (state != null) location += location.isEmpty() ? state : ("," + state);
        if (country != null) location += location.isEmpty() ? country : ("," + country);
        if (location.isEmpty()) {
            System.out.println("no location information found");
            return;
        }
        timelineRequestHttpClient(location, startDate, endDate);
    }

First the code creates a document from the text and then annotates it using the pipeline we configured above. After annotation is complete, we can examine the results to find out whether we have any of the entity mentions we need.

To find the possible entity mentions, we loop through the list produced during annotation. We use the entity type to identify the information we have received. If we find a date, we use the Timex instance to find the start and end dates of its range.
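The Calendar to LocalDate conversion used for the start and end dates can be looked at in isolation. The sketch below is a variation on the sample rather than a copy of it: instead of the system default zone it uses the time zone carried by the Calendar itself, on the assumption that this is the zone in which the date was meant. The class and method names are hypothetical.

```java
import java.time.LocalDate;
import java.util.Calendar;
import java.util.GregorianCalendar;
import java.util.TimeZone;

// Hypothetical helper for illustration; not part of the sample.
class CalendarDates {

    // Converts a Calendar to a LocalDate using the calendar's own time zone,
    // so the result does not depend on the JVM default zone.
    static LocalDate toLocalDate(Calendar calendar) {
        if (calendar == null) {
            return null;
        }
        return calendar.toInstant()
                .atZone(calendar.getTimeZone().toZoneId())
                .toLocalDate();
    }

    public static void main(String[] args) {
        Calendar cal = new GregorianCalendar(TimeZone.getTimeZone("Asia/Tokyo"));
        cal.clear();
        cal.set(1975, Calendar.JANUARY, 6); // midnight in Tokyo on 6th January 1975
        System.out.println(toLocalDate(cal)); // prints 1975-01-06
    }
}
```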

After that, we look for location related annotations (city, state or province name or country). If we find any of them, we remember them.

After all the entities have been examined, we construct a single location string that combines the location entities we found (just as an address is a combination of city, state, country, etc.). We need a single string so that we can pass the location information to the Weather API and help it identify the exact location being requested.
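The same combination step can also be written with a stream, which some readers may find easier to follow than repeated string concatenation. This is a minimal sketch with hypothetical names; unlike the sample, it also trims each part and skips parts that are empty.

```java
import java.util.Objects;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper for illustration; not part of the sample.
class LocationJoiner {

    // Joins the parts that are present with commas, in the order city, state, country.
    static String join(String city, String state, String country) {
        return Stream.of(city, state, country)
                .filter(Objects::nonNull)
                .map(String::trim)
                .filter(part -> !part.isEmpty())
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        System.out.println(join("Tokyo", null, "Japan")); // prints Tokyo,Japan
    }
}
```

An empty result means no location was found, which matches the check the sample makes before calling the Weather API.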

Retrieving the information from the weather API

We now have the location and the date or date range for our request, so we can proceed to read weather data from the Weather API. For more information on how to use the Weather API in Java and for different ways to read data over the network, check out How to use timeline weather API to retrieve historical weather data and weather forecast data in Java.

We need a few more Maven dependencies in our case to handle the network request and the JSON parsing:

    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.12</version>
    </dependency>
    <dependency>
        <groupId>org.json</groupId>
        <artifactId>json</artifactId>
        <version>20200518</version>
    </dependency>

The Weather API is used to request the weather data in JSON format:

    import java.net.URLEncoder;
    import java.nio.charset.StandardCharsets;
    import java.time.LocalDate;
    import java.time.format.DateTimeFormatter;
    import org.apache.http.HttpEntity;
    import org.apache.http.HttpStatus;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.client.utils.URIBuilder;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    public static void timelineRequestHttpClient(String location, LocalDate startDate, LocalDate endDate) throws Exception {
        // set up the end point
        String apiEndPoint = "https://weather.visualcrossing.com/VisualCrossingWebServices/rest/services/timeline/";
        String unitGroup = "us";
        StringBuilder requestBuilder = new StringBuilder(apiEndPoint);
        requestBuilder.append(URLEncoder.encode(location,
                StandardCharsets.UTF_8.toString()));
        // add the start date and, for a range, the end date
        if (startDate != null) {
            requestBuilder.append("/")
                    .append(startDate.format(DateTimeFormatter.ISO_DATE));
            if (endDate != null && !startDate.equals(endDate)) {
                requestBuilder.append("/")
                        .append(endDate.format(DateTimeFormatter.ISO_DATE));
            }
        }
        // API_KEY is a String constant holding your Weather API key
        URIBuilder builder = new URIBuilder(requestBuilder.toString());
        builder.setParameter("unitGroup", unitGroup)
                .setParameter("key", API_KEY)
                .setParameter("include", "days")
                .setParameter("elements", "datetimeEpoch,tempmax,tempmin,precip");
        HttpGet get = new HttpGet(builder.build());
        String rawResult = null;
        // try-with-resources closes both the client and the response
        try (CloseableHttpClient httpclient = HttpClients.createDefault();
             CloseableHttpResponse response = httpclient.execute(get)) {
            if (response.getStatusLine().getStatusCode() != HttpStatus.SC_OK) {
                System.out.printf("Bad response status code:%d%n",
                        response.getStatusLine().getStatusCode());
                return;
            }
            HttpEntity entity = response.getEntity();
            if (entity != null) {
                rawResult = EntityUtils.toString(entity, StandardCharsets.UTF_8);
            }
        }
        parseTimelineJson(rawResult);
    }

The first part of the code creates the Timeline Weather API request URL. First the location is added to the request. The dates are then added if necessary: if a single date is requested, it is added by itself; otherwise both the start and end dates are added. If no date is added to the request, the 15 day forecast will be returned.

A single date request will look like this:

https://weather.visualcrossing.com/VisualCrossingWebServices/rest/services/timeline/Washington,DC/2020-07-04?unitGroup=us&key=YOUR_API_KEY&include=days&elements=datetimeEpoch,tempmax,tempmin,precip

A date range request will look like this:

https://weather.visualcrossing.com/VisualCrossingWebServices/rest/services/timeline/Washington,DC/2020-07-04/2020-08-04?unitGroup=us&key=YOUR_API_KEY&include=days&elements=datetimeEpoch,tempmax,tempmin,precip

Note also the use of the optional ‘elements’ and ‘include’ parameters. These parameters are used to filter the amount of data that is returned from the request.

include=days, the value set in the code above, tells the API to only give us day level information (otherwise hourly information will also be included).

elements=datetimeEpoch,tempmax,tempmin,precip instructs the API to only return those four pieces of weather data. Other information such as wind, pressure etc will not be included.
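To make the URL structure concrete, here is a minimal sketch that assembles the same kind of request URL using only the standard library. The class and method names are hypothetical, and the sketch percent-encodes the commas and spaces in the location and in the ‘elements’ list, so the result looks slightly different from the readable URLs shown above; it is an assumption of this sketch that the encoded form is handled the same way.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical URL builder for illustration; the sample itself uses URIBuilder.
class TimelineUrl {

    static final String BASE =
            "https://weather.visualcrossing.com/VisualCrossingWebServices/rest/services/timeline/";

    // Percent-encodes a value. URLEncoder writes spaces as '+', so swap them for %20.
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8").replace("+", "%20");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
    }

    // Builds location[/start[/end]] followed by the query parameters.
    static String build(String location, LocalDate start, LocalDate end, String apiKey) {
        StringBuilder url = new StringBuilder(BASE).append(encode(location));
        if (start != null) {
            url.append('/').append(start.format(DateTimeFormatter.ISO_DATE));
            if (end != null && !end.equals(start)) {
                url.append('/').append(end.format(DateTimeFormatter.ISO_DATE));
            }
        }
        url.append("?unitGroup=us")
           .append("&key=").append(encode(apiKey))
           .append("&include=days")
           .append("&elements=").append(encode("datetimeEpoch,tempmax,tempmin,precip"));
        return url.toString();
    }

    public static void main(String[] args) {
        System.out.println(build("Washington,DC", LocalDate.of(2020, 7, 4), null, "YOUR_API_KEY"));
    }
}
```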

Displaying the weather data

The final piece of the code displays a very simple table of weather data to the command line:

    import java.time.Instant;
    import java.time.ZoneId;
    import java.time.ZonedDateTime;
    import java.time.format.DateTimeFormatter;
    import org.json.JSONArray;
    import org.json.JSONObject;

    private static void parseTimelineJson(String rawResult) {
        if (rawResult == null || rawResult.isEmpty()) {
            System.out.printf("No raw data%n");
            return;
        }
        // parse the JSON response
        JSONObject timelineResponse = new JSONObject(rawResult);
        // time zone of the requested location
        ZoneId zoneId = ZoneId.of(timelineResponse.getString("timezone"));
        System.out.printf("Weather data for: %s%n",
                timelineResponse.getString("resolvedAddress"));
        JSONArray values = timelineResponse.getJSONArray("days");
        // one header per column printed below
        System.out.printf("Date\tMaxTemp\tMinTemp\tPrecip%n");
        for (int i = 0; i < values.length(); i++) {
            JSONObject dayValue = values.getJSONObject(i);
            // convert the epoch seconds to a date in the location's time zone
            ZonedDateTime datetime = ZonedDateTime.ofInstant(
                    Instant.ofEpochSecond(dayValue.getLong("datetimeEpoch")), zoneId);
            double maxtemp = dayValue.getDouble("tempmax");
            double mintemp = dayValue.getDouble("tempmin");
            double precip = dayValue.getDouble("precip");
            System.out.printf("%s\t%.1f\t%.1f\t%.1f%n",
                    datetime.format(DateTimeFormatter.ISO_LOCAL_DATE),
                    maxtemp, mintemp, precip);
        }
    }

This code parses the incoming JSON data from the Weather API into a JSONObject. From there, the code loops through the values in the days array and extracts the datetimeEpoch, tempmax, tempmin and precip values from each day.

The date is processed by reading the seconds since epoch and supplying the time zone ID of the location. This creates a ZonedDateTime instance.
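The reason for supplying the location’s time zone becomes clearer with a small standalone example. The sketch below uses a hand-picked epoch value for midnight in Tokyo on 4th July 2020 and shows that the same instant falls on a different calendar date when read in UTC. The class and method names are hypothetical.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;

// Hypothetical helper for illustration; not part of the sample.
class EpochDates {

    // Converts seconds since the epoch to the calendar date in the given time zone.
    static LocalDate toLocalDate(long epochSeconds, String zoneId) {
        return Instant.ofEpochSecond(epochSeconds)
                .atZone(ZoneId.of(zoneId))
                .toLocalDate();
    }

    public static void main(String[] args) {
        long midnightInTokyo = 1593788400L; // 2020-07-03T15:00:00Z
        System.out.println(toLocalDate(midnightInTokyo, "Asia/Tokyo")); // prints 2020-07-04
        System.out.println(toLocalDate(midnightInTokyo, "UTC"));        // prints 2020-07-03
    }
}
```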

Questions or need help?

If you have a question or need help, please post on our actively monitored forum for the fastest replies. You can also contact us via our support site or drop us an email at support@visualcrossing.com.